DevOps Interview Questions and Answers

Most Common DevOps Interview Questions and Answers

1. What is DevOps?

2. What are the benefits of DevOps?

3. What are the challenges of implementing DevOps?

4. What are the roles and responsibilities of a DevOps engineer?

5. What are the top DevOps tools?

6. What is version control in DevOps?

7. What is continuous integration?

8. What's the difference between continuous delivery and continuous deployment?

9. What is infrastructure as code (IaC)?

10. What is containerization?

Basic-level DevOps Interview Questions

Let us start with the basics: beginner-level DevOps interview questions and answers, curated by DevOps experts to help you prepare.

1. What is DevOps?

DevOps is a culture and a set of practices that brings together software development (Dev) and IT operations (Ops). It encourages the two teams to collaborate and streamlines the handoffs between them to optimize software delivery. The aim is to automate and coordinate the steps involved in building, testing, deploying, and monitoring software applications.

2. What are the benefits of DevOps?

DevOps offers a number of advantages: faster software delivery, better collaboration between teams, and higher-quality, more reliable releases. It speeds up issue resolution, improves efficiency through automation, and keeps delivery closely aligned with business objectives. In short, DevOps helps organizations ship high-quality software quickly, makes operations more agile, and improves customer satisfaction.

3. What are some common misconceptions about DevOps?

Common misconceptions about DevOps include:

- DevOps is a Tool: One misconception is viewing DevOps as a single tool or technology. DevOps is a culture and a set of practices built around collaboration; tools are enablers, not the core.

- It's Only for Developers: Contrary to the name, DevOps involves both development and operations teams working collaboratively to achieve efficient software delivery.

- It Eliminates Operations Roles: DevOps doesn't eliminate operations roles; it transforms them. Operations engineers still play a vital role in ensuring the reliability and stability of systems.

- It's All About Automation: Automation is a crucial component, but DevOps also emphasizes collaboration, cultural change, and process improvement.

- Standardization of Tools: DevOps doesn't mandate a specific set of tools. Organizations can choose the tools that best fit their needs, as long as those tools support collaboration and automation.

- Instant Results: Achieving DevOps benefits takes time. It's a journey that requires organizational alignment, cultural shifts, and continuous improvement.

- DevOps Equals Continuous Deployment: DevOps emphasizes continuous integration and delivery, but continuous deployment may be suitable only for some applications.

- No Need for Documentation: DevOps favors automation and working software over heavyweight documentation, but documentation remains essential for knowledge sharing, onboarding, and compliance.

4. What is the role of AWS in DevOps?

Amazon Web Services (AWS) plays a major role in DevOps, offering a cloud foundation and a range of services that support automation, scalability, and smooth deployment. These services underpin DevOps practices such as continuous integration, continuous delivery, and infrastructure as code. Essential AWS services for DevOps include Amazon EC2, AWS Lambda, Amazon S3, AWS CodePipeline, AWS CodeBuild, and AWS CodeDeploy.

5. What are your thoughts on the future of DevOps?

The future of DevOps looks bright. As the world becomes increasingly digitized, demand for fast and dependable software development will keep growing. DevOps is a proven way to meet this demand, and businesses of all sizes are adopting it.

Here are some of the key trends that I think will shape the future of DevOps:

- Cloud Computing's Ascendance: Widespread cloud adoption makes deploying and scaling applications simpler and cheaper. This increases the need for DevOps practices that let teams change applications quickly and effectively in cloud environments.

- AI and Machine Learning's Surge: AI and machine learning are increasingly used to automate DevOps tasks such as testing, deployment, and monitoring. This frees DevOps engineers to focus on strategic work, such as making the DevOps pipeline more efficient.

- Elevated Security Emphasis: Security is becoming increasingly important in DevOps, since organizations must protect their applications against breaches. DevOps practices help by automating the deployment of security patches and integrating security assessments into the development lifecycle.

6. What is version control in DevOps?

Version control in DevOps is the practice of tracking changes made to code, documents, or any files in a systematic way. It enables multiple contributors to collaborate on a project, keeps a history of changes, helps in identifying and resolving conflicts, and ensures the availability of a reliable source of truth.
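To make "a history of changes" and "a reliable source of truth" more concrete, here is a small sketch of how Git identifies each version of a file: by a SHA-1 hash of the file's bytes plus a short header. The `git_blob_id` name is illustrative; its output should match `git hash-object` for the same bytes in a default (SHA-1) repository when no content filters such as line-ending conversion are configured.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Return the ID Git gives a file's contents in a SHA-1 repository.

    Git hashes the header "blob <size in bytes>" plus a NUL byte, followed by
    the raw contents, so identical contents always map to the same ID and any
    edit produces a different one.
    """
    header = b"blob " + str(len(content)).encode() + b"\0"
    return hashlib.sha1(header + content).hexdigest()


if __name__ == "__main__":
    v1 = git_blob_id(b"print('hello')\n")
    v2 = git_blob_id(b"print('hello, world')\n")
    print(v1)
    print(v1 != v2)  # True: the edit is recorded as a new version
```

Because every stored version is addressed by its content hash, any change (or corruption) of a file is detectable, which is part of what makes the repository trustworthy.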

7. What is continuous integration?

Continuous Integration (CI) is a DevOps practice where developers frequently integrate their code changes into a shared repository. Each integration triggers automated tests and checks, ensuring that new code integrates smoothly with the existing codebase and does not introduce issues.
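The core loop of a CI job can be sketched as follows: run each check against the integrated code and fail the build as soon as one check fails. This is a minimal, hypothetical stand-in written in Python; real CI servers such as Jenkins or GitLab CI/CD define these steps in their own configuration, and the `tests` directory in the example is an assumption.

```python
import subprocess
import sys


def run_ci_checks(commands):
    """Run each check command in order and stop at the first failure.

    Returns True only if every command exits with status 0, mirroring how a
    CI server marks an integration as passing or failing.
    """
    for cmd in commands:
        print("Running:", " ".join(cmd))
        result = subprocess.run(cmd)
        if result.returncode != 0:
            print(f"Failed with exit code {result.returncode}")
            return False
    return True


if __name__ == "__main__":
    # Hypothetical check list: substitute your project's linters and test runner.
    checks = [
        [sys.executable, "-m", "unittest", "discover", "-s", "tests"],
    ]
    sys.exit(0 if run_ci_checks(checks) else 1)
```

The nonzero exit status is what a CI server uses to mark the integration as broken and notify the team.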

8. What is the difference between continuous delivery and continuous deployment?

Continuous delivery is the practice of automatically preparing code changes for deployment to production. Continuous deployment, on the other hand, goes a step further by automatically deploying those changes to production once they pass automated testing. Continuous delivery allows for manual approval before deployment, while continuous deployment does not require manual intervention.
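The difference comes down to a single gate, as this hypothetical Python sketch shows: with `auto_deploy=False` (continuous delivery) a passing build waits for manual approval, while with `auto_deploy=True` (continuous deployment) it goes straight to production. The function and parameter names are illustrative, not any particular tool's API.

```python
def release(tests_passed, auto_deploy, approved=False):
    """Return what happens to a build at the end of the pipeline.

    auto_deploy=False models continuous delivery: a passing build is ready to
    ship, but production waits for a manual approval.
    auto_deploy=True models continuous deployment: passing builds ship at once.
    """
    if not tests_passed:
        return "rejected"           # failing builds never reach production
    if auto_deploy or approved:
        return "deployed"           # released to production
    return "awaiting approval"      # releasable, held at the manual gate


if __name__ == "__main__":
    print(release(tests_passed=True, auto_deploy=False))                 # awaiting approval
    print(release(tests_passed=True, auto_deploy=False, approved=True))  # deployed
    print(release(tests_passed=True, auto_deploy=True))                  # deployed
```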

9. What is infrastructure as code (IaC)?

Infrastructure as Code (IaC) is a key DevOps practice in which infrastructure setup and configuration are expressed as code, enabling automated provisioning and management. Treating infrastructure programmatically ensures consistency and reproducibility while minimizing manual intervention. Because infrastructure definitions can be versioned and reviewed like application code, IaC brings development and operations closer together, speeds up deployments, and reduces configuration errors.
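As a sketch of the idea (not any specific tool's API), the Python function below compares the infrastructure declared in code with what currently exists and lists the changes needed, similar in spirit to the plan step of tools such as Terraform. The resource names and attributes are hypothetical.

```python
def plan(desired, current):
    """List the changes needed to turn `current` infrastructure into `desired`.

    Both arguments map a resource name to its configuration (a dict).
    Returns sorted (action, name) pairs where action is create/update/delete.
    """
    actions = []
    for name, config in desired.items():
        if name not in current:
            actions.append(("create", name))
        elif current[name] != config:
            actions.append(("update", name))
    for name in current:
        if name not in desired:
            actions.append(("delete", name))
    return sorted(actions)


if __name__ == "__main__":
    # Hypothetical resources: what the code declares vs. what exists today.
    desired = {
        "web": {"type": "vm", "size": "small", "count": 2},
        "db": {"type": "database", "size": "large"},
    }
    current = {
        "web": {"type": "vm", "size": "small", "count": 1},
        "cache": {"type": "redis", "size": "small"},
    }
    print(plan(desired, current))
    # [('create', 'db'), ('delete', 'cache'), ('update', 'web')]
    print(plan(desired, desired))  # [] -> applying the same code again changes nothing
```

Because the plan is computed from the declared state, running it against infrastructure that already matches the code yields no actions, which is what makes IaC reproducible.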

10. What is containerization?

Containerization is a technology that encapsulates an application and its dependencies into a lightweight, portable unit called a container. Containers provide isolation, making applications consistent across different environments and enabling efficient deployment, scaling, and management. Docker is a popular tool for containerization.

Intermediate-level DevOps Interview Questions

As we advance on our DevOps journey, we move beyond the basics to intermediate-level DevOps interview questions and answers. These questions were prepared based on the requests we have received, to take your knowledge to the next level. Students who enroll in our DevOps Training course also get mock interview preparation with experts.

1. What is the difference between Agile and DevOps?

While both Agile and DevOps aim to enhance software development, they focus on distinct aspects.

Agile centers on iterative development, fostering customer collaboration and adapting to change. DevOps extends this by integrating development and operations, emphasizing automation, continuous delivery, and collaboration across the entire software lifecycle. Agile accelerates development cycles, while DevOps optimizes deployment and operations, ensuring a seamless and efficient end-to-end process.

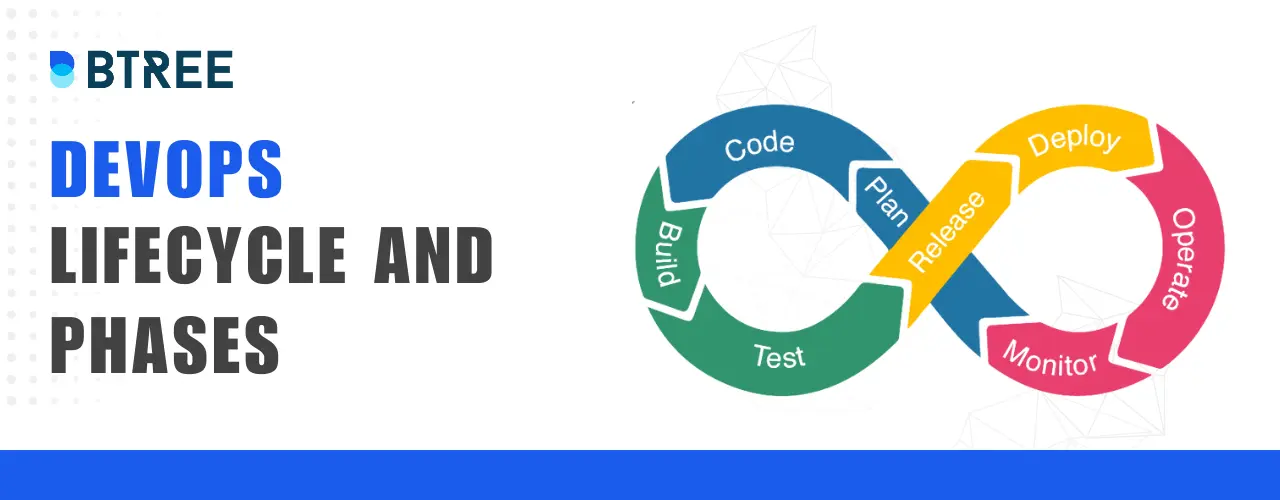

2. Explain the different phases of the DevOps lifecycle.

The DevOps lifecycle comprises various phases: Plan, Code, Build, Test, Deploy, Operate, and Monitor.

Planning involves defining objectives, and coding involves writing and reviewing code. Building entails compiling code and creating binaries. Testing validates functionality, and deployment ensures a smooth transition to production. Operations manage the deployed application, while monitoring tracks its performance. This continuous cycle emphasizes collaboration, automation, and feedback, improving software delivery and maintenance.

3. How does DevOps differ from traditional software development?

DevOps differs by integrating development and operations teams, promoting collaboration throughout the software lifecycle. Unlike traditional methods, DevOps emphasizes automation, continuous integration, and deployment. Traditional approaches focus on sequential phases and can result in longer release cycles. DevOps enables a culture of collaboration, transparency, and efficiency, leading to faster, more reliable releases that meet user needs and business goals.

4. How can DevOps improve the speed and quality of software delivery?

DevOps achieves this by automating processes like testing, integration, and deployment, reducing manual errors and cycle times. Collaboration between teams ensures faster feedback and issue resolution. Continuous monitoring enhances product quality by identifying and addressing issues early. This streamlined approach leads to frequent, smaller releases that can be adapted quickly based on user feedback, improving both speed and quality.

5. How do you use configuration management to automate deployments?

Configuration management tools like Ansible or Puppet automate the setup and configuration of infrastructure. By defining configurations as code, these tools ensure consistency and repeatability.

Automated deployments are achieved by scripting infrastructure setup, application installation, and configuration changes. This approach reduces human errors, minimizes configuration drift, and enables rapid, reliable deployment and scaling.
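Idempotence is what makes this repeatable: applying the same configuration twice should change nothing the second time. The hypothetical `ensure_line` helper below, loosely modeled on what Ansible's `lineinfile` module does, shows the pattern in Python; the config file name and setting are illustrative.

```python
import os
import tempfile
from pathlib import Path


def ensure_line(path, line):
    """Make sure `line` appears in the file at `path`, creating the file if needed.

    Returns True if the file was changed and False if it was already in the
    desired state, so running it repeatedly is safe (idempotent).
    """
    p = Path(path)
    text = p.read_text() if p.exists() else ""
    if line in text.splitlines():
        return False  # already configured: make no change
    if text and not text.endswith("\n"):
        text += "\n"  # keep the new line from being glued onto the last one
    p.write_text(text + line + "\n")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        conf = os.path.join(tmp, "app.conf")  # hypothetical config file
        print(ensure_line(conf, "max_connections = 100"))  # True: line added
        print(ensure_line(conf, "max_connections = 100"))  # False: nothing to do
```

Reporting "changed" versus "unchanged" is also how configuration management tools decide whether follow-up steps, such as restarting a service, are needed.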

6. How does automation contribute to faster and more reliable software releases?

Automation reduces manual intervention in tasks like testing, building, and deploying code, thereby accelerating the entire release process. It ensures consistency across environments, minimizing configuration errors. Automated testing validates code changes swiftly, identifying bugs earlier in the development cycle. Continuous integration and deployment pipelines, driven by automation, enable quick, reliable, and repeatable software releases.

7. Name a few Continuous Integration tools.

Here are a few Continuous Integration tools that are widely used:

- Jenkins: Known for its extensibility, Jenkins automates the build, test, and deployment processes, integrating with various plugins and technologies.

- Travis CI: This cloud-based CI tool offers seamless integration with GitHub repositories, enabling automated testing and deployment workflows.

- CircleCI: CircleCI provides fast and parallelized builds and tests, making it suitable for continuous integration in modern software development.

- GitLab CI/CD: Integrated within GitLab, this tool enables automated pipelines for code integration, testing, and deployment, enhancing collaboration.

- Bamboo: Atlassian's Bamboo automates builds, tests, and deployments and integrates closely with Jira and other Atlassian products.

8. What is microservices architecture?

Microservices architecture is a software design method where applications are constructed as a collection of small, independent services that communicate through APIs. Each service focuses on a specific function and can be developed, deployed, and scaled independently.
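As a minimal illustration, assuming plain HTTP with JSON payloads (real systems often add service discovery, authentication, and an API gateway), the sketch below runs a tiny hypothetical "price" service using only Python's standard library and shows how another service would call it through its API.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class PriceHandler(BaseHTTPRequestHandler):
    """Hypothetical 'price' service with one JSON endpoint: GET /price/<item>."""

    PRICES = {"apple": 1.25, "pear": 0.80}  # illustrative in-memory data

    def do_GET(self):
        prefix = "/price/"
        item = self.path[len(prefix):] if self.path.startswith(prefix) else None
        if item in self.PRICES:
            status, payload = 200, {"item": item, "price": self.PRICES[item]}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging for the demo


def start_service(port=0):
    """Run the service in a background thread; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), PriceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_price(base_url, item):
    """The API call another service (e.g. a hypothetical 'orders' service) would make."""
    # Ignore any proxy settings: the demo service runs on this machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(f"{base_url}/price/{item}", timeout=5) as resp:
        return json.loads(resp.read())["price"]


if __name__ == "__main__":
    server = start_service()
    host, port = server.server_address
    print(get_price(f"http://{host}:{port}", "apple"))  # 1.25
    server.shutdown()
    server.server_close()
```

Because callers depend only on the HTTP contract, the price service could be rewritten, redeployed, or scaled without changing the services that call it.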

9. What are some of the benefits and challenges of microservices architecture?

Benefits: Microservices architecture offers enhanced scalability, agility, and independent service deployment. It facilitates easier technology adoption and innovation, accelerates development, and supports better fault isolation. Teams can work on different services concurrently, enabling faster updates and releases.

Challenges: Managing inter-service communication complexity, ensuring data consistency across services, and dealing with potential service failures pose challenges. Microservices require comprehensive monitoring, and the orchestration of deployments can be intricate. Version compatibility and the potential for increased operational overhead are also considerations.
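One common way to cope with transient service failures is to retry calls with exponential backoff. The sketch below is a minimal, hypothetical helper; production systems usually add jitter, timeouts, and circuit breakers, and the exception types treated as retryable here are an assumption.

```python
import time


def call_with_retry(func, attempts=3, base_delay=0.5,
                    retry_on=(ConnectionError, TimeoutError)):
    """Call func(), retrying transient failures with exponential backoff.

    Waits base_delay, 2*base_delay, 4*base_delay, ... between attempts and
    re-raises the last error once all attempts are used. Exceptions not listed
    in retry_on propagate immediately.
    """
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller handle the failure
            time.sleep(base_delay * 2 ** attempt)


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_service():
        # Hypothetical downstream call that fails twice, then succeeds.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("service unavailable")
        return "ok"

    print(call_with_retry(flaky_service, attempts=3, base_delay=0.01))  # ok
```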

10. Describe the concept of "shift-left" in the context of DevOps.

"Shift-left" in DevOps refers to moving tasks and activities earlier in the software development lifecycle. It entails integrating testing, security, and quality checks as early as possible, typically during the development phase. This approach identifies and rectifies issues at their inception, reducing the chances of defects or vulnerabilities later in the process. "Shift-left" promotes collaboration, enhances code quality, and accelerates feedback loops, resulting in more robust and secure software releases.

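A typical shift-left step is scanning code for hard-coded secrets before it is even committed. The sketch below is a deliberately small, hypothetical scanner that could run from a Git pre-commit hook; the two patterns are illustrative, and dedicated tools ship far larger rule sets.

```python
import re
import sys

# Illustrative patterns only; real scanners use many more rules.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),  # shape of an AWS access key ID
    re.compile(r"(?i)(password|secret|api_key)\s*=\s*['\"][^'\"]+['\"]"),
]


def find_secrets(text):
    """Return (line_number, line) pairs that look like hard-coded secrets."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        if any(p.search(line) for p in SECRET_PATTERNS):
            hits.append((number, line.strip()))
    return hits


if __name__ == "__main__":
    # Scan the files named on the command line, e.g. the staged files in a hook.
    found = False
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8", errors="replace") as f:
            for number, line in find_secrets(f.read()):
                print(f"{path}:{number}: possible secret: {line}")
                found = True
    sys.exit(1 if found else 0)
```

A pre-commit hook that exits with a nonzero status blocks the commit, so a line such as `password = 'hunter2'` is caught on the developer's machine instead of later in the pipeline.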

Expert-level DevOps Interview Questions

As we dig deeper into DevOps, we tackle advanced-level DevOps interview questions and answers. Here, we look at advanced questions that interviewers frequently ask.

1. How can you ensure the security of your DevOps pipelines?

Securing DevOps pipelines involves several strategies. Combined, the practices below help establish a secure pipeline, minimize risk, and protect the entire software development lifecycle.

Access Controls: Set up stringent access controls through role-based permissions to limit unauthorized access to pipeline resources.

Encryption: Employ encryption techniques to safeguard sensitive data like credentials and configurations.

Regular Updates: Keep all tools, libraries, and dependencies up-to-date to mitigate vulnerabilities and security gaps.

Vulnerability Scanning: Integrate continuous vulnerability scanning to identify potential security issues in the pipeline components.

Penetration Testing: Conduct routine penetration testing to simulate real-world attacks and rectify any weaknesses.

Version Control: Utilize version control for pipeline configurations and scripts to track changes and ensure accountability.

Authentication: Implement strong authentication methods, including multi-factor authentication, to prevent unauthorized access.

Monitoring: Set up robust logging and monitoring mechanisms to detect and respond to unusual activities promptly.
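The access-control point can be sketched as a role-based permission check that denies by default. The roles and actions below are hypothetical; in practice they would map to your CI/CD tool's own permission model.

```python
# Hypothetical roles and the pipeline actions each one may perform.
ROLE_PERMISSIONS = {
    "developer": {"view_pipeline", "trigger_build"},
    "release_manager": {"view_pipeline", "trigger_build", "deploy_production"},
    "auditor": {"view_pipeline", "view_logs"},
}


def is_allowed(roles, action):
    """Return True if any of the user's roles grants the requested action.

    Unknown roles grant nothing, so access is denied by default.
    """
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


if __name__ == "__main__":
    print(is_allowed(["developer"], "deploy_production"))        # False
    print(is_allowed(["release_manager"], "deploy_production"))  # True
    print(is_allowed(["intern"], "view_pipeline"))               # False
```

Denying by default means a misspelled or newly added role gets no access until someone grants it explicitly, which is the safer failure mode.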

2. What are the benefits of using Jenkins for continuous integration?

Jenkins offers numerous benefits. Its extensive plugin ecosystem lets it integrate with source control systems, testing frameworks, and deployment platforms. It automates repetitive tasks, accelerating the build-test-deploy process. Because it is open source, it is flexible, customizable, and backed by a large community, which makes it a popular choice for building robust CI/CD pipelines.

3. How does Ansible differ from Puppet and Chef?

Ansible, Puppet, and Chef are configuration management tools, but they differ in several ways.

- Ansible: Ansible is agentless and push-based, communicating with managed nodes over SSH (Secure Shell). It emphasizes simplicity and ease of use, with tasks written in YAML playbooks. Most Ansible modules are idempotent, so running a playbook repeatedly converges on the desired state rather than reapplying changes.

- Puppet: Puppet uses agents installed on managed nodes to apply configurations. It uses its own declarative language to define desired states. Puppet offers a mature feature set and a rich ecosystem of modules.

- Chef: Chef also uses agents and defines configurations in recipes written in a Ruby-based domain-specific language. Because recipes are Ruby code, Chef offers a great deal of flexibility for custom logic in recipes and resources.

4. What are the advantages of using Docker over virtual machines?

Using Docker offers several advantages over traditional virtual machines:

- Resource Efficiency: Docker containers share the host OS kernel, resulting in lower resource overhead than separate virtual machine instances.

- Faster Startup: Containers start much faster than virtual machines, enabling quicker deployment and scaling of applications.

- Consistency: Docker ensures consistent environments across development, testing, and production, reducing "it works on my machine" issues.

- Isolation: Containers offer process-level isolation, making them lightweight while providing sufficient isolation between applications.

- Portability: Docker images are portable and can run on any system that supports Docker and matches the image's operating system and CPU architecture, regardless of the underlying infrastructure.

- Versioning: Docker images can be versioned, simplifying application packaging, distribution, and rollbacks.

- Ecosystem: Docker has a rich ecosystem of images and tools, making it easier to find and share pre-configured containers and automate processes.

5. Describe the differences between Docker Compose and Kubernetes. When would you choose one over the other?

Docker Compose: Docker Compose is a tool for defining and managing multi-container applications. It uses a simple YAML file to specify services, networks, and volumes. Compose is designed for single-host environments and simplifies spinning up interconnected containers. It can run several replicas of a service on one host, but it lacks multi-host scheduling, cluster-wide load balancing, and high availability.

Kubernetes: Kubernetes is a powerful container orchestration platform that automates the deployment, scaling, and management of containerized applications. It offers advanced features such as automatic scaling, load balancing, rolling updates, and self-healing. Kubernetes is suitable for complex microservices architectures and larger deployments.

Choosing Between Them: Use Docker Compose for smaller applications or local development environments where simplicity and rapid setup are priorities. Choose Kubernetes for large-scale, production-grade deployments requiring high availability, scaling, and advanced orchestration capabilities.

6. What are the benefits and challenges of using GitOps?

Benefits of Using GitOps:

- Version Control: GitOps leverages version control systems like Git to manage infrastructure and application configurations, providing an audit trail and enabling rollbacks to previous states.

- Declarative Configurations: Infrastructure is defined declaratively, ensuring that the desired state is maintained, reducing configuration drift and human errors.

- Automation: GitOps automates deployments based on Git repository changes, eliminating manual intervention and minimizing human errors.

- Collaboration: GitOps allows teams to work on the same infrastructure configurations and application code using familiar Git workflows.

- Consistency: By relying on a source of truth in Git repositories, GitOps ensures consistent and reproducible deployments across environments.

Challenges of Using GitOps:

- Learning Curve: Teams unfamiliar with Git and GitOps concepts might face a learning curve in adopting the methodology.

- Repository Management: Maintaining repository hygiene, access controls, and organization becomes crucial as repositories grow.

- Security Risks: Inadequate access controls to repositories could lead to security vulnerabilities if unauthorized changes are made.

- Complexity: Setting up and managing GitOps pipelines and workflows for highly complex environments might be intricate.

- Lack of Real-time Control: GitOps relies on repository changes for deployments, which might not be suitable for scenarios requiring immediate adjustments.

7. Can you explain how to use version control systems like Git and how to handle branching and merging?

Branches are essential in Git for managing different lines of development and isolating changes. Here's how you can handle branching and merging:

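Creating a Branch:

- To create a new branch: git branch new-branch-name

- To switch to the new branch: git checkout new-branch-name or git switch new-branch-name

- To create a branch and switch to it in one step: git checkout -b new-branch-name or git switch -c new-branch-name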

Working on a Branch:

- Make changes to the code in your branch.

- Stage your changes with git add file-name (or git add . for all changes), then commit them: git commit -m "Commit message"

Merging Branches:

- To merge changes from one branch into another:

- First, switch to the target branch: git checkout target-branch

- Then, merge the source branch: git merge source-branch

- Git will attempt to merge changes automatically. If there are conflicts, you need to resolve them manually.

Handling Conflicts:

- Conflicts occur when Git can't automatically merge changes due to conflicting modifications in the same code area.

- Git marks conflicts in the affected files with conflict markers (<<<<<<<, =======, >>>>>>>). Open each file, resolve the conflicts, stage the resolved files with git add, and commit.

Pushing and Pulling:

- To push your local branch to a remote: git push origin branch-name

- To fetch changes from a remote: git fetch origin.

- To pull changes from a remote into your current branch: git pull origin branch-name

Deleting a Branch:

- Once a branch is no longer needed, you can delete it:

- Locally: git branch -d branch-name (use -D to force-delete a branch that has not been merged)

- Remotely: git push origin --delete branch-name

8. How would you use containerization technologies like Docker or Kubernetes to deploy and manage applications?

Here is a breakdown of using Docker and Kubernetes for deployment:

Docker:

- Create a Dockerfile describing how to build your application's Docker image.

- Build the Docker image with docker build, giving it a name and version tag (docker build -t name:tag .).

- Push the image to a container registry.

- Use the image to deploy containers consistently across different environments.

Kubernetes:

- Define application deployment and service configurations using Kubernetes manifests (YAML files).

- Apply the manifests to the cluster with kubectl apply -f.

- Kubernetes orchestrates container deployment, scaling, and management based on the defined configurations.

9. Can you write a script that checks the availability of a website and sends an email notification if it is down?

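    import os
    import smtplib
    from email.message import EmailMessage

    import requests


    def check_website(url, timeout=10):
        """Return True if the URL answers with HTTP 200, otherwise False."""
        try:
            response = requests.get(url, timeout=timeout)
            return response.status_code == 200
        except requests.RequestException:
            # Connection errors, timeouts, invalid URLs, etc. all count as "down".
            return False


    def send_email(subject, body, to_addr):
        """Send a plain-text email through Gmail's SMTP server over STARTTLS."""
        # Credentials come from environment variables instead of being hard-coded.
        # Gmail typically requires an app password for SMTP logins.
        gmail_user = os.environ['GMAIL_USER']
        gmail_password = os.environ['GMAIL_APP_PASSWORD']

        msg = EmailMessage()
        msg['Subject'] = subject
        msg['From'] = gmail_user
        msg['To'] = to_addr
        msg.set_content(body)

        with smtplib.SMTP('smtp.gmail.com', 587) as smtp:
            smtp.ehlo()
            smtp.starttls()
            smtp.login(gmail_user, gmail_password)
            smtp.send_message(msg)


    def main():
        url = 'https://www.example.com'
        to_addr = 'you@example.com'  # recipient of the alert

        if not check_website(url):
            subject = 'Website is down'
            body = f'The website {url} is down.'
            send_email(subject, body, to_addr)


    if __name__ == '__main__':
        main()

The script uses the third-party requests library to fetch the page and the standard-library smtplib and email modules to send the alert. It defines three functions. check_website() returns True only when the URL responds with HTTP status 200; any request error, such as a timeout or a refused connection, counts as the site being down. send_email() connects to Gmail's SMTP server, upgrades the connection with STARTTLS, logs in, and sends a message with the given subject and body to the given recipient.

The main() function is the entry point. It sets the website URL to monitor and the email address for notifications, calls check_website() to assess the website's status, and, if the site is inaccessible, calls send_email() to alert the recipient. The Gmail credentials are read from the GMAIL_USER and GMAIL_APP_PASSWORD environment variables (names chosen for this example) so that no password is stored in the source code.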

This is just a basic example of a script that can be used to check the availability of a website and send an email notification if it is down. You can customize it to fit your specific needs.

10. What is AWS CodeBuild, and how does it integrate with other DevOps tools?

AWS CodeBuild is a managed build service provided by Amazon Web Services (AWS) that automates the process of compiling source code, running tests, and producing deployable artifacts.

Integration with Other DevOps Tools: AWS CodeBuild integrates with various other DevOps tools to support the CI/CD workflow:

- AWS CodePipeline: CodeBuild is often used as the build and test stage within AWS CodePipeline, which orchestrates the overall CI/CD process. Deployment is typically handled by a later pipeline stage, for example one that uses AWS CodeDeploy.

- Source Code Repositories: CodeBuild integrates with source code repositories like GitHub, Bitbucket, and AWS CodeCommit. When code changes are pushed to these repositories, CodeBuild can be triggered to initiate the build process.

- Artifact Repositories: After building and testing the code, CodeBuild generates artifacts. These artifacts can be stored in repositories like Amazon S3 or AWS CodeArtifact for later deployment or distribution.

- Deployment Tools: Once artifacts are generated, they can be seamlessly deployed using tools like AWS CodeDeploy, AWS Elastic Beanstalk, or Kubernetes.

- Docker Integration: CodeBuild can also work with Docker containers, allowing you to build and test applications within Docker images, making it compatible with container-based DevOps workflows.

Conclusion

DevOps interviews can be challenging, but they can also be rewarding. The range of questions, tailored to different skill levels and covering everything from fundamental ideas for beginners to complex automation tactics for experienced practitioners, reflects the many facets of DevOps. Preparing for these interviews sharpens your technical skills and also highlights how important smooth communication between development and operations is in modern software engineering.